For all their apparent competence,

P/C Dalle-D. Prompt: computational oracles answering questions rendered as an expressive oil painting set on a sweeping landscape

... when you get down to asking specific, verifiable questions about people, the LLMs are not doing a great job.

As a friend once said to me about LLMs: "it's all cybernetic mansplaining."

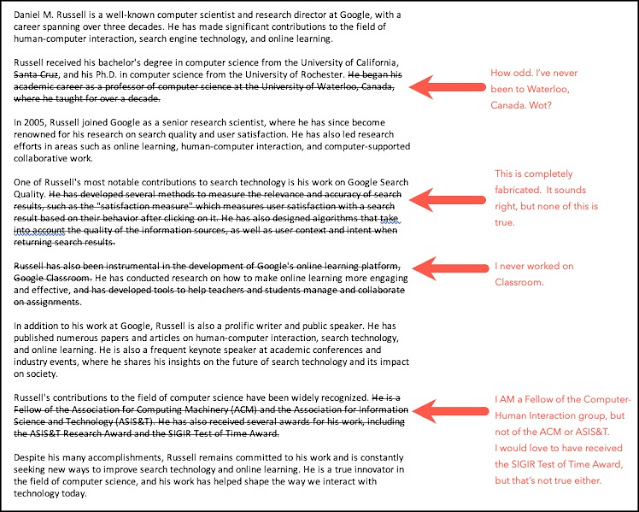

When I asked ChatGPT-4 to "write a 500-word biography of Daniel M. Russell, computer scientist from Google," I got a blurb about me that's about 50% correct. (See below for the annotated version.)

When I tried again, modifying the prompt to include "... Would you please be as accurate as possible and give citations?" the response did not improve. It was different (lots of the "facts" had changed), and there were references to different works, but often the cited works didn't actually support the claims.

So that's pretty disappointing.

But even worse, when I asked Bard for the same thing, the reply was

"I do not have enough information about that person to help with your request. I am a large language model, and I am able to communicate and generate human-like text in response to a wide range of prompts and questions, but my knowledge about this person is limited. "

That's odd, because when I do a Google search for

[ Daniel M. Russell computer scientist ]

I show up in the first 26 positions. (And no, I didn't do any special SEO on my content.)

But to say "I do not have enough information about that person..." is just wrong.

I tested the "write a 500 word biography" prompt on Bard--it only generates them for REALLY well known people. Even then, when I asked for a bio of Kara Swisher, the very well-known reporter and podcaster, several of the details were wrong. I did a few other short bios of people I know well. Same behavior every single time. Out of the 5 bios I tried, none of them were blunder-free.

Bottom line: Don't trust an LLM to give you accurate information about a person. At this point, it's not just wrong, it's confidently wrong. You have to fact-check every single thing.

Here's what ChatGPT-4 says about me. (Sigh.)

Keep searching. Really.

am flummoxed

seems kinda abstract… (a collaboration with NANI)

https://imgur.com/a/n4n1XqP

trudat:

"Bottom line: Don't trust an LLM to give you accurate information about a person. At this point, it's not just wrong, it's confidently wrong. You have to fact-check every single thing. "

Love the image. Thanks!

might be useful - beware "cheeky monkeys" but listen to them as well.

https://www.scientificamerican.com/article/how-to-tell-if-a-photo-is-an-ai-generated-fake/

https://www.scientificamerican.com/article/how-my-ai-image-won-a-major-photography-competition/

don't know how abstract expressionism would have occurred if it needed a written description/prompt…

Jackson Pollock wasn't much of a keyboarder… or even voice input

amazing views

the old ways of image alteration/creation

Good point. Maybe "random drips of paint over a blank canvas repeatedly until the canvas is nearly obscured"?
