In this week's Challenge ...

I asked two questions that seem different, but are really both examples of web scraping. When I realized they could both be done using the same tool... well, that's too much of a chance to demonstration how to use web scraping in your day-to-day SearchResearch!

I asked these questions which are both "can you grab this data from a web site, and then make these charts?"

In the comments, Ramón got us off to a good start by pointing out that both of these problems required grabbing data from websites, which he pointed out is called "web scraping." That's how I started this Challenge solution as well, by searching for web scraping tools. (I'll write another post about web scraping generally... but this is what I did for this Challenge.)

[ web scraping tool ]

As you can see, there are many, many tools to do this--I just chose the first one, Import.io, as a handy way to scrape the internship data and the Ikea catalog.

Web scraping is just having a program extract data from a web page. In the case of Import.io, you just hand it a URL and it pretty much just hands back a CSV file with the data in it.

So, for the first Challenge (finding cities with internships about "big data" that are in the Bay Area, but not in SF), we first have to find a web site that has internships listed. My query to find them was:

[ summer internships Mountain View ]

Why Mountain View in the query? Because it's pretty centrally located (and it's where I go to work everyday, so I have a local's interest), and I know there are a lot of large companies there with internship possibilities.

Looking at the list of results, I found Indeed.com, Jobs.com, InternMatch.com (and many others).

I decided to scrape the LinkedIn.com listings--again, because they're just down the street, and I happen to known they have a large interest in "big data" topics.

Their jobs site is: www.linkedin.com/job/ -- I just filled out the form for internships near Mountain View on the topic of "big data" and found a nice set of results.

Note that I set the "Location" filter to be "Mountain View" (I could have set it to any city in the Valley).

To scrape this data, I just grab the URL from the page:

https://www.linkedin.com/job/intern-%22big-data%22-jobs-mountain-view-ca/?sort=relevance&page_num=2&trk=jserp_pagination_2

And drop that into Import.IO -- and let it scrape out the data.

At the bottom of the Import.IO page there's a "Download..." option. I saved this data to a CSV file, and then imported that into a Google Spreadsheet, which gave me:

As you probably noticed, this is only 25 positions. I can easily go to the "next page" of results on the LinkedIn site, copy the URL, drop that into Import.IO and repeat. I did this, and appended the results to the bottom of the spreadsheet. (Shared spreadsheet link.)

In the spreadsheet you can see that I copied all of the city names and put them into Sheet 3 ("Cities"). I did a quick cleanup there, and created a second column "CityName" so I could then pull this spreadsheet into a new My Map. But FIRST I copied all of the Cities and CityNames into another spreadsheet with JUST the names of the cities in it. (Why? Because My Maps doesn't like to import spreadsheets with special characters in the columns. A fast workaround is to just make a new sheet with just the data--the city names--that I care about.)

So.. my new (city names only) spreadsheet looks like this:

And when you import that into a My Map, you get this map:

Note that there are many more cities in the spreadsheet than are shown here--there are a lot of duplicates. (I guess we could have looked-up the street addresses for each business, but that's too much like real work.)

And now that you know this method....

doing the Ikea sofas is just as straight-forward.

Go to the Ikea page and search for sofas. Grab the URL and paste into Import.IO -- that gives you another data table. Their URL looks like this:

http://www.ikea.com/us/en/search/?query=sofa&pageNumber=1

Notice that this is page 1 out of 36. It's kind of a hassle to get all 36 (but I'll write up how to do that in another post!), so let's get a few more and do the same "save as CSV."

Luckily, Import.IO has a "Save 5 pages" which automatically grabs the next 5 pages of Ikea data (just by changing the &pageNumber=1 argument in the URL above.

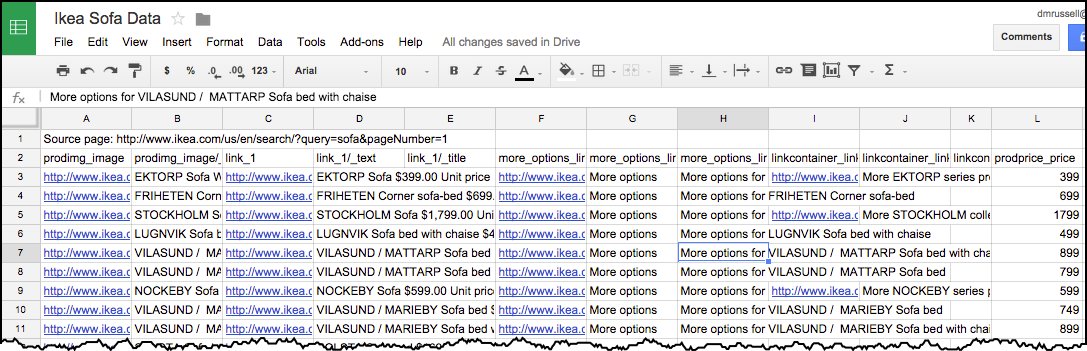

So by the time you save the CSV and import it into your spreadsheet, you'll have 120 rows of data. (For completeness, you could go to page 6, and then import the next 5 pages... but I'm happy with 120 samples of Ikea line of sofas.) Here's my spreadsheet:

And then you can easily copy out column L (prodprice_price) and do whatever kind of visualization you'd like, including the chart like that above, the chart below, or others:

Search Lessons:

1. Find the right tool. As I've said many times before, often the best way to start a complex project is to figure out what operation you're actually doing. (This particular task is called "web scraping.") And then find a tool that will help you out. In this case, Import.IO is perfect for the task. Be aware that there are many such tools--some of which might be better matches for the task you're doing. (It all depends on the details of what you're trying to do.)

2. Linking tools together. To solve this Challenge, you needed to not just extract the data, but also load it into your favorite spreadsheet, do a bit of cleaning, and then either visualize it with your spreadsheet charting tools, or export/import your data to My Maps (or whatever your intended end goal is).

Hope you enjoyed this Challenge as much as I did!

Search on!

______

Addendum: In response to a couple of questions from readers, I went back and made the map of "big data internships" truly interactive. Now if you click on a pin, you'll see the city, the company with the position, and a link to the job posting.

I asked two questions that seem different, but are really both examples of web scraping. When I realized they could both be done using the same tool... well, that's too much of a chance to demonstration how to use web scraping in your day-to-day SearchResearch!

I asked these questions which are both "can you grab this data from a web site, and then make these charts?"

1. Can you find (or create) a table of 50 summer internship positions in cities that are in Silicon Valley, and not in San Francisco? Ideally, you'd make an interactive map (like the one above), where you can click on the red button and read about the internship.

2. Can you make a chart like this one showing the price distribution of all the sofas in the Ikea catalog? (With the current catalog data.)

In the comments, Ramón got us off to a good start by pointing out that both of these problems required grabbing data from websites, which he pointed out is called "web scraping." That's how I started this Challenge solution as well, by searching for web scraping tools. (I'll write another post about web scraping generally... but this is what I did for this Challenge.)

[ web scraping tool ]

As you can see, there are many, many tools to do this--I just chose the first one, Import.io, as a handy way to scrape the internship data and the Ikea catalog.

Web scraping is just having a program extract data from a web page. In the case of Import.io, you just hand it a URL and it pretty much just hands back a CSV file with the data in it.

So, for the first Challenge (finding cities with internships about "big data" that are in the Bay Area, but not in SF), we first have to find a web site that has internships listed. My query to find them was:

[ summer internships Mountain View ]

Why Mountain View in the query? Because it's pretty centrally located (and it's where I go to work everyday, so I have a local's interest), and I know there are a lot of large companies there with internship possibilities.

Looking at the list of results, I found Indeed.com, Jobs.com, InternMatch.com (and many others).

I decided to scrape the LinkedIn.com listings--again, because they're just down the street, and I happen to known they have a large interest in "big data" topics.

Their jobs site is: www.linkedin.com/job/ -- I just filled out the form for internships near Mountain View on the topic of "big data" and found a nice set of results.

Note that I set the "Location" filter to be "Mountain View" (I could have set it to any city in the Valley).

To scrape this data, I just grab the URL from the page:

https://www.linkedin.com/job/intern-%22big-data%22-jobs-mountain-view-ca/?sort=relevance&page_num=2&trk=jserp_pagination_2

And drop that into Import.IO -- and let it scrape out the data.

At the bottom of the Import.IO page there's a "Download..." option. I saved this data to a CSV file, and then imported that into a Google Spreadsheet, which gave me:

As you probably noticed, this is only 25 positions. I can easily go to the "next page" of results on the LinkedIn site, copy the URL, drop that into Import.IO and repeat. I did this, and appended the results to the bottom of the spreadsheet. (Shared spreadsheet link.)

In the spreadsheet you can see that I copied all of the city names and put them into Sheet 3 ("Cities"). I did a quick cleanup there, and created a second column "CityName" so I could then pull this spreadsheet into a new My Map. But FIRST I copied all of the Cities and CityNames into another spreadsheet with JUST the names of the cities in it. (Why? Because My Maps doesn't like to import spreadsheets with special characters in the columns. A fast workaround is to just make a new sheet with just the data--the city names--that I care about.)

So.. my new (city names only) spreadsheet looks like this:

And when you import that into a My Map, you get this map:

Note that there are many more cities in the spreadsheet than are shown here--there are a lot of duplicates. (I guess we could have looked-up the street addresses for each business, but that's too much like real work.)

And now that you know this method....

doing the Ikea sofas is just as straight-forward.

Go to the Ikea page and search for sofas. Grab the URL and paste into Import.IO -- that gives you another data table. Their URL looks like this:

http://www.ikea.com/us/en/search/?query=sofa&pageNumber=1

Notice that this is page 1 out of 36. It's kind of a hassle to get all 36 (but I'll write up how to do that in another post!), so let's get a few more and do the same "save as CSV."

Luckily, Import.IO has a "Save 5 pages" which automatically grabs the next 5 pages of Ikea data (just by changing the &pageNumber=1 argument in the URL above.

So by the time you save the CSV and import it into your spreadsheet, you'll have 120 rows of data. (For completeness, you could go to page 6, and then import the next 5 pages... but I'm happy with 120 samples of Ikea line of sofas.) Here's my spreadsheet:

And then you can easily copy out column L (prodprice_price) and do whatever kind of visualization you'd like, including the chart like that above, the chart below, or others:

Such as a histogram of prices (in $150 / bucket price-ranges). You can see here where the bulk of their product lies. Ikea has a target audience in mind, but also carries a few rather expensive items as well.

Search Lessons:

1. Find the right tool. As I've said many times before, often the best way to start a complex project is to figure out what operation you're actually doing. (This particular task is called "web scraping.") And then find a tool that will help you out. In this case, Import.IO is perfect for the task. Be aware that there are many such tools--some of which might be better matches for the task you're doing. (It all depends on the details of what you're trying to do.)

2. Linking tools together. To solve this Challenge, you needed to not just extract the data, but also load it into your favorite spreadsheet, do a bit of cleaning, and then either visualize it with your spreadsheet charting tools, or export/import your data to My Maps (or whatever your intended end goal is).

Hope you enjoyed this Challenge as much as I did!

Search on!

______

Addendum: In response to a couple of questions from readers, I went back and made the map of "big data internships" truly interactive. Now if you click on a pin, you'll see the city, the company with the position, and a link to the job posting.