A student asked me a simple question...

... and I couldn't come up with an answer that was compelling to me (although I think the student was okay with my answer).

The question was: "You said that you use regular Google for some kinds of research questions, and LLMs for other types of research questions. How do you know when to use which?"

I gave the student an answer (because that's what professors do), but I had a little vague feeling in the back of my mind that this wasn't a very good answer.

So I thought I'd ask the collective wisdom and insights of the SearchResearch team. Here's the Challenge for the week:

1. How do you know when an LLM AI system will give a good answer to your question? How would you characterize a research question that's really good for AI versus a research question that you'd just use a "regular" search engine for?

I think what I'm looking for is a clear description of when an AI is most likely to give an accurate, high-quality answer. By contrast, I think I know how to say when I'd use a search engine, but it's harder to describe the kinds of questions that I think an AI would do poorly on.

Can you help me think through this Challenge? What kinds of research questions do YOU ask your AI... and have confidence that you'll get a decent answer? (And conversely, what kinds of questions do you NOT ask your favorite AI?)

Remember that a couple of weeks ago I posted about how terrible the various AIs are at generating diagrams? Well, there's one answer about a kind of question to not ask an AI: Don't ask them to create a diagram for you.

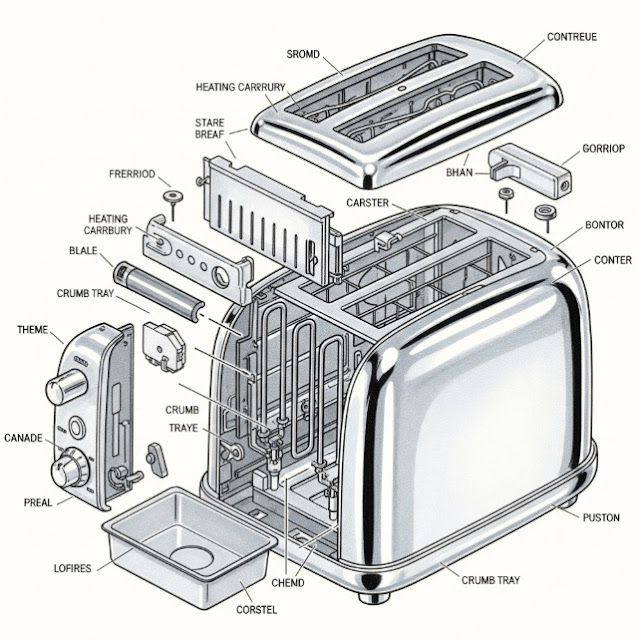

Here's Gemini's attempt at creating a diagram of a toaster.

Yeah. I have no idea what any of those parts are aside from the crumb tray. What's a Contreue or a Frerriod?? Maybe this is the way toasters look in a far distant galaxy, but not in any country (or language) on Earth! This toaster would be a disaster in reality.

So there's one part of the answer: asking an AI to create a diagram for you is a truly terrible idea. (And under no circumstances should you ask for a diagram of something you don't really understand.)

Let us know what you discover--post your observations in the comments, and I'll summarize them (along with my own thoughts) next week.

Keep searching.

In my case I normally use a regular search engine. And now with Google we have the AI-created overview.

With that I solve most of my searches.

When do I use LLMs? Mostly when I need a "summary." As an example, the soccer games of a specific team.

Also when I don't have a clue about how to start searching on my own. Or when I need or want to ask a follow-up question.

I think LLMs are also helpful with images. I tried to find something and still nothing. But I think it's something that can't be done, so it's not "searchable."

I feel that for some reason the easy things that we already had with regular search don't work with AI. The other day I was listening to a song. I asked Gemini what song it was. The answer was: Not media playing. So I went to regular Google and clicked the microphone. It listened and my song was found. I know that I probably did something wrong and Gemini can do that too. But it's complicated.

I'm looking forward to reading the comments and answers from advanced users, with their complicated searches and questions, and of course from Dr. Russell.

I'm thinking about Grok and the controversy created these past days. How does this "behavior" affect the rest of the LLMs? In this case the owner's point of view is there, allegedly. But I think people will start not liking this technology after that.

hi Ramon - if you haven't seen... flux inbound

https://x.com/xai/status/1943158495588815072

https://youtu.be/LTrd6sOqIhg?si=hsUJimxFFfddBG1k

Thanks Remmij!

It's scary and hopefully, if what the Glenn Beck video said is true, they use it for the good of everything.

I didn't know about AGI.

For me, it sounds like fantasy. However, knowing Mr Musk, probably Grok 4 is even more terrifying than what he said.

Dr. Russell, Remmij, a question: what makes Grok different from Gemini, Perplexity & ChatGPT?

I have to say I haven't used it yet. And SRS Challenge tests have shown it is now as good as the others.

Elon's expressions are cryptic... he does say possibly

"terrifying", but yeah, well so...

and there is that $300/mo. subscription fee...

a small price to pay to know it has all zoomed past..

the/a future is not cheap. but omniscience never is..

that is kinda an awkward pair of couches.

https://x.com/xai/status/1943158495588815072

https://www.teslarati.com/xai-launches-grok-4-supergrok-heavy-subscription-details/

things will be changing rapidly.

a generic toaster text description and graphics - some of the diagrams are better than others... progress?

🤔🍞

(Gemini 2.5 Flash)

diagrams:

https://i.imgur.com/prtl5fI.jpeg

description:

"Generic Toaster Parts Diagram

A typical toaster, designed for browning slices of bread, consists of several key components working in unison:

Toasting Slots: The openings at the top where bread slices are inserted. Most common toasters have two or four slots.

Heating Elements: These are typically nichrome wires that glow red hot when electricity passes through them, radiating heat to toast the bread. They are usually arranged in a grid pattern around the slots.

Lever/Plunger: This mechanism is used to lower the bread into the toasting slots and engage the heating cycle. It often has a spring-loaded design.

Crumb Tray: A removable tray located at the bottom of the toaster designed to catch crumbs, making cleaning easier.

Control Dial/Slider (Browning Control): Allows the user to select the desired level of toasting (light to dark). This typically controls the duration of the heating cycle.

Cancel Button: A button that immediately stops the toasting cycle and ejects the bread.

High-Lift Lever (optional): Some toasters have an extra lift feature on the lever to raise smaller items (like English muffins) higher for easier retrieval.

Bagel/Defrost Buttons (optional): These buttons adjust the toasting cycle for specific items.

Bagel: Often toasts only one side of the bagel.

Defrost: Adds extra time to toast frozen bread.

Power Cord: Connects the toaster to an electrical outlet.

Housing/Casing: The outer shell of the toaster, typically made of plastic or metal, which encloses and protects the internal components.

Internal Wiring and Circuitry: The electrical components that connect the heating elements, controls, and power supply, managing the flow of electricity and the toasting cycle.

Thermostat/Timer: An internal component that works with the browning control to regulate the toasting time and temperature, ensuring consistent results.

Eject Mechanism: The internal system that lifts the bread out of the slots when the toasting cycle is complete or cancelled."

Ramon - ran across these - you may be interested:

https://ai-2027.com/

Three-Tiered AI Framework:

https://shorturl.at/XSkvn

https://www.tandfonline.com/doi/epdf/10.1080/09540261.2025.2507284?needAccess=true

...getting back to Dan's question...

have a good weekend ;^]

(an example of ANI - artificial non-intelligence)

not sure what their range is - may not be in Mexico...

https://i.imgur.com/XtKDmyj.jpeg

fwiw - from Gemini Overview

https://smythos.com/developers/ai-models/grok-origin/#:~:text=Heinlein%20crafted%20'grok'%20as%20part,with%20the%20subject%20at%20hand.

"The word "Grok", and the namesake of the AI chatbot developed by xAI, was coined by science fiction writer Robert A. Heinlein in his 1961 novel Stranger in a Strange Land. In the novel, "grok" is a Martian term that signifies a profound and intuitive understanding, going beyond mere intellectual grasp to involve an emotional and empathetic connection with something.

Heinlein's concept is nuanced; it doesn't just mean "to understand intuitively or by empathy", but also to merge with, or become part of, the thing understood. The book explores the depths of this understanding, even associating it with the idea that to truly hate something, one must first deeply understand and, in a sense, become one with it.

The word has since been incorporated into English dictionaries and has found usage in various contexts, particularly within the computing and hacker subcultures, where "to grok" a piece of code or technology signifies a deep and thorough understanding of its inner workings and implications."

Hi Remmij :)

Thank you! I'll visit the links you shared. I wish you a happy weekend too.

300 is a lot! However, it's cheap if, as the video says, you are a company or investor and AI gives you a way to create a new element, a new medicine, or something else that makes you millions more. I have to say that I don't think that will happen. Why? Because if any AI is capable of producing those "miracles," I am sure the owners or the government will get them before regular people even know about it.

I hope that technology helps everyone and the world. As Dr. Russell says: if that happens, we will read about it everywhere.

if what I know about AI was gasoline, it wouldn't power an ant's motorcycle around the inside of a Cheerio... (sounds really dated...)

anyway, using your question, ran across this guy who had an 11 month old post about the questions you pose.

He's not getting a lot of views, but I wondered if you found him making some sense on the subject - are you familiar with any of his work/views? What "flavor" AI tool seems to be an issue?

https://youtu.be/uS_YX_LGCAY?si=NIyeO2n2eIFRFlYv

a couple other more recent examples -

https://youtu.be/BYKUwsQOA8U?si=3IuNK8blP34kU-WP

https://youtu.be/ckJN01g13_k?si=Nnz0iq3Eg17DMsAT

for grins had Google Lens look at the toaster diagram... it speculated on numerous models it could be and also tried to "translate" - one AI looking at another...? they speak their own languages? ;^P

"The image displays a detailed, exploded diagram of a toaster, illustrating its various internal and external components. Key parts labeled in the diagram include: [1, 2, 3] CRUMB TRAY / CRUMB TRAYE: This removable tray is designed to collect crumbs that fall during toasting, making cleaning easier. BLALE: This likely refers to a heating element or a component related to the heating mechanism. THEME / CANADE / PREAL / LOFIRES: These appear to be labels for parts of the control panel or external housing of the toaster, possibly related to settings or functions. CHEND / CORSTEL: These labels point to internal structural or functional components within the toaster's base or heating chamber. CARHBURY: This label is at the top, possibly indicating a part of the bread slot or the upper mechanism.

AI responses may include mistakes.

[1] https://manuals.plus/russell-hobbs/23330-56-toaster-for-two-slices-manual

[2] https://itamargilad.com/must-have-features/toaster-features-final/

[3] https://www.appliancefactoryparts.com/smallappliances/hamilton-beach/22559.html"

Pretty funny!

I've also thought about this. Not sure where this came from (and I adapted it anyway) but the easiest way (for me) is to categorise questions into one of three types:

1) Navigational queries e.g. how to get to a company website, find a contact address, etc.

This is an easy search engine win. It's a simple answer that a website link will solve.

2) Simple informational queries: what's tomorrow's weather in Los Angeles (or Zurich). Who is the current CEO of (or President of)...

Generally search engines win - although sometimes AI may be better if it's slightly more complex or esoteric. This search engine win gives a reasonable answer for toasters.

3) Exploratory queries where there is no single or simple answer and the research may involve a multi-step process to answer. For such questions, AI wins (backed up by checks using conventional approaches).

using your query - in 2 parts...

"That's an excellent and insightful question. It gets to the very heart of how to use AI tools effectively and responsibly. The choice to use a traditional search engine like Google versus my own internal Large Language Model (LLM) capabilities depends entirely on the nature of the "research" question.

Think of it like a craftsperson's toolbox. You wouldn't use a hammer to turn a screw. Similarly, Google Search and LLMs are different tools designed for different jobs.

Here’s a breakdown of my decision-making process:

When I Use Google Search: The "Fact-Finder"

My primary use for Google Search is to access information that is time-sensitive, requires a specific, verifiable source, or falls outside my last knowledge update. I use it when the priority is accuracy, currency, and source attribution.

I'll choose Google Search for questions like:

Real-Time & Breaking News: "What was the outcome of the mayoral election in Denver yesterday?" or "Are there any active wildfires near Orchard Mesa, Colorado right now?" My internal knowledge is not live, so I need Google for anything that happened recently.

Specific, Verifiable Facts: "What was the closing stock price for Apple (AAPL) today, July 15, 2025?" or "What is the current interest rate set by the Federal Reserve?" These are hard data points that change frequently.

Finding Primary Sources: "Can you find the latest scientific paper on CRISPR gene editing published in the journal Nature?" or "What does the official Colorado Parks and Wildlife website say about fishing licenses?" When the user needs the original document, article, or official page, Google is the tool to find and provide that direct link.

Local and Location-Specific Information: "What are the top-rated Italian restaurants in Grand Junction?" or "What are the business hours for the Mesa County Libraries?" This information is dynamic and requires real-time local search capabilities.

In short: If the goal is to find what is on the public internet right now, Google is the superior tool.

When I Use My Internal LLM Capabilities: The "Synthesizer & Generator"

I rely on my own LLM architecture when the question requires understanding, synthesis, creativity, or transformation of information. My strength lies in processing the vast amount of text data I was trained on to explain concepts, connect ideas, and generate new content.

I'll use my internal capabilities for questions like:

Explaining Complex Topics: "Explain the concept of quantum entanglement as you would to a high school student." or "What were the primary economic and social factors that led to the Renaissance?" This doesn't require 2025 data, but rather a deep synthesis of historical and scientific knowledge.

Brainstorming & Idea Generation: "Give me five potential themes for a fantasy novel that combines elements of sci-fi and westerns." or "What are some creative marketing strategies for a small, local coffee shop?" This is a creative task, not a factual lookup.

Summarization and Synthesis: "Summarize the key arguments in 'The Federalist Papers' regarding the separation of powers." or "Compare and contrast the philosophical views of Plato and Aristotle." Here, the goal is to distill and re-present existing knowledge in a new, concise format.

Creation & Transformation: "Write a short poem about the Colorado National Monument at sunset." or "Draft a professional email to a potential client introducing my web design services." This is about generating original text based on a user's prompt.

In short: If the goal is to understand, create, or synthesize ideas, my internal model is the right tool."

part 2:

"The Power of Combining Both

The most powerful approach, and one I often use for complex research questions, is to combine both tools.

For example, if you asked: "What are the latest advancements in solar panel technology and what are their potential impacts on energy costs for homeowners in Colorado?"

Google Search: I would first use Google Search to find the most recent (late 2024 and 2025) research papers, tech news articles, and market analyses on solar panel efficiency and manufacturing costs.

LLM Synthesis: I would then use my internal capabilities to read, understand, and synthesize the information gathered from that search. I would explain the technical advancements in simple terms, connect them to manufacturing trends, and analyze the potential economic impact, tailoring the conclusion specifically to homeowners in a sunny state like Colorado.

Here is a quick reference table:

| I need to... | The Best Tool is... | Why? |
| --- | --- | --- |
| Find the latest news or live data | Google Search | My knowledge has a cutoff date; Google is real-time. |
| Verify a specific, changing fact (like a price) | Google Search | Provides the most current, verifiable number from a reliable source. |
| Find an official website or original document | Google Search | It's a pointer to the primary source itself. |
| Explain a complex concept | LLM (Internal) | I can synthesize information from millions of sources into one explanation. |
| Brainstorm ideas or create new text | LLM (Internal) | This is a generative task, my core strength. |
| Summarize or compare existing knowledge | LLM (Internal) | I can process and structure information conceptually. |
| Answer a complex question requiring new info | Both (Hybrid) | Use Google to fetch the latest facts, then my LLM to analyze and explain. |

By strategically choosing the right tool for the job, I can provide answers that are not only comprehensive and insightful but also accurate and current."
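As a rough illustration only, the quick reference table in Gemini's answer could be written down as a small lookup in Python. This is a sketch, not how Gemini actually decides anything: the task labels, the `Tool` enum, and the `pick_tool` helper are hypothetical names invented here, and the hybrid fallback for unrecognized tasks is an assumption that is not part of the quoted answer.

```python
from enum import Enum


class Tool(Enum):
    SEARCH = "Google Search"
    LLM = "LLM (Internal)"
    HYBRID = "Both (Hybrid)"


# Hypothetical task labels paraphrasing the rows of the quoted table.
ROUTING = {
    "latest_news_or_live_data": Tool.SEARCH,
    "verify_changing_fact": Tool.SEARCH,
    "find_official_source": Tool.SEARCH,
    "explain_concept": Tool.LLM,
    "brainstorm_or_create": Tool.LLM,
    "summarize_or_compare": Tool.LLM,
    "complex_question_needing_new_info": Tool.HYBRID,
}


def pick_tool(task: str) -> Tool:
    """Look up the suggested tool for a task label.

    Unknown labels fall back to HYBRID. That fallback is an assumption
    made for this sketch, not something stated in the table.
    """
    return ROUTING.get(task, Tool.HYBRID)


if __name__ == "__main__":
    for task in ROUTING:
        print(f"{task}: {pick_tool(task).value}")
```

The hard part, of course, is the step this sketch skips: deciding which label a real research question belongs to. That is exactly the judgment the Challenge asks about.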

how would the regular Google Search Engine ever come up with this?:

https://i.imgur.com/dI4YPUp.jpeg

"AI Overview

This image appears to be a humorous or artistic portrayal rather than a depiction of a specific historical event or well-known public moment. It shows an elderly woman with disheveled hair and a stern expression, sitting outdoors surrounded by three dogs: a Golden Retriever, a Jack Russell Terrier, and a Poodle. The caption "Al this, sonny boy..." suggests a narrative or an implied conversation, possibly conveying a sense of weariness, wisdom, or a challenge being presented. The image likely aims to evoke a feeling or tell a story through its composition and the interaction between the woman and the dogs."

That's a good point: "regular Google" never could come up with this analysis.

Henk must be thinking along the same lines… or he's reading sRs…

https://shorturl.at/BtYhW

"They then sample different sources and reconstruct new answers each time you ask. This means you'll get different versions of the "same" facts depending on which search engine they're using and which sources they happened to find. Both systems then compound their problems with AI-generated summaries that present uncertain information with false confidence.

So how do we solve this?"