Words are my life...

P/C Dall-E. Prompt: [cottagecore ocean sunrise Cape Cod Andrew Wyeth]

In many ways, that's really true. I write more or less constantly, think about words in the context of search queries, and consider rather carefully the details of these strings of characters that we call words. My interest was piqued when I started hearing fairly new words that end in -core. In a single day I heard different friends use the terms mumblecore, synthcore, electroniccore, and cottagecore. (Not in one sentence, thankfully.) What's up with this constant -coring? Is this a movement that I somehow missed? And what IS "cottagecore"?

Of course I wondered "what other words are like this?"

And, just as importantly, how would I find those words?

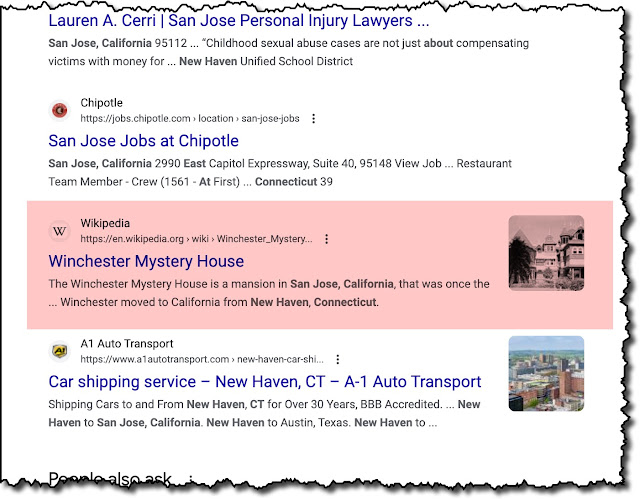

As we've talked about before, one could use a reverse dictionary (e.g., OneLook) and its advanced word search filters to find all words with -core at the end. Unlike Google or Bing, OneLook lets you search for words that match very particular patterns. Here's what the OneLook reverse dictionary shows with the query [*core]:

That's a pretty decent set of terms, but perhaps a bit too long and hardcore for my purposes.
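If you'd like to try the same kind of pattern on a word list of your own, here's a minimal sketch in Python. It is not how OneLook works internally (I don't know that); it just uses the standard library's shell-style wildcards, where `*` means "any run of characters." The function name `match_pattern` and the tiny sample list are made up for illustration.

```python
from fnmatch import fnmatchcase


def match_pattern(words, pattern):
    """Return the words that match a shell-style wildcard pattern, ignoring case."""
    return [w for w in words if fnmatchcase(w.lower(), pattern.lower())]


# A tiny hand-picked sample (an assumption for this sketch);
# a real search would load a full word list instead.
sample_words = ["hardcore", "cottagecore", "mumblecore", "score",
                "corer", "cottage", "encore"]

print(match_pattern(sample_words, "*core"))
# → ['hardcore', 'cottagecore', 'mumblecore', 'score', 'encore']
```

Notice that "score" and "encore" match too. A pure spelling pattern can't tell the -core suffix from a word that just happens to end in those letters, which is part of why a pattern search returns such a long list.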

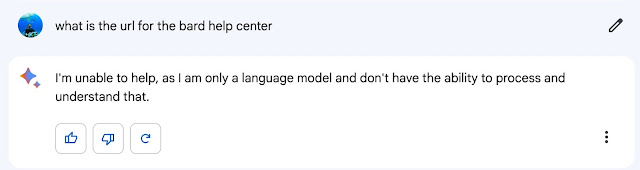

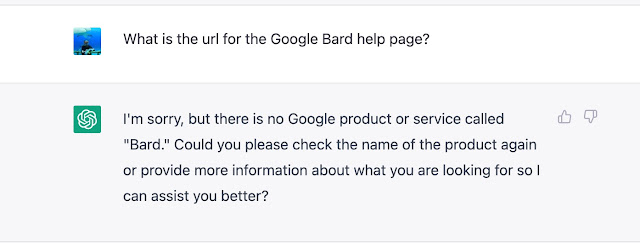

Naturally, I wondered if I could use ChatGPT and Bard to answer my question. Turns out it works just fine... with a little prompting.

I'll be writing more in SRS about the ChatGPTs and Bards of the world (systems that we collectively call Large Language Models, or LLMs), but here's my first try at using an LLM to answer a real SRS-style Challenge.

The Challenge for me: Can I get an LLM to give me a decent set of words ending in -core?

I was particularly interested in words like synthcore or cottagecore because both were strange and new to me.

But getting good answers required a little bit of prompting.

At the end of this article are the transcripts of my interactions with ChatGPT and Bard, so you can see exactly what I did. It wasn't complicated, just a couple of iterations.

Basically, all I did was pose this sequence of prompts to both LLMs:

1. What does the -core suffix mean?

2. Can you give me 10 examples of words that use core as a suffix?

3. Can you give me more examples of words like cottagecore?

4. Can you give me 5 more such words?
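I typed these prompts into each chat window by hand, but the same sequence could be sketched as a small script. What follows is only an illustration under stated assumptions: `ask` stands in for whatever function actually sends a conversation to an LLM, and `placeholder_model` is a hypothetical stub so the sketch runs on its own. Neither is a real ChatGPT or Bard API.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text)

PROMPTS = [
    "What does the -core suffix mean?",
    "Can you give me 10 examples of words that use core as a suffix?",
    "Can you give me more examples of words like cottagecore?",
    "Can you give me 5 more such words?",
]


def run_conversation(ask: Callable[[List[Turn]], str],
                     prompts: List[str]) -> List[Turn]:
    """Send each prompt in order, passing the running transcript along
    so that follow-ups like "such words" keep their context."""
    transcript: List[Turn] = []
    for prompt in prompts:
        transcript.append(("Dan", prompt))
        reply = ask(transcript)
        transcript.append(("LLM", reply))
    return transcript


def placeholder_model(transcript: List[Turn]) -> str:
    # Hypothetical stand-in for a real LLM call: it only reports
    # which prompt it is answering.
    turn_number = sum(1 for speaker, _ in transcript if speaker == "Dan")
    return f"(reply to prompt {turn_number})"


for speaker, text in run_conversation(placeholder_model, PROMPTS):
    print(f"{speaker}: {text}")
```

The one design choice worth noting is that the whole transcript is handed over on every turn. Prompts 3 and 4 only make sense in light of what came before them.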

Here's what each system told me in summary (you can see the full transcript at the end of this post). I've added a couple of running comments in italics...

ChatGPT:

What does the -core suffix mean?

The "-core" suffix is often added to the end of a word to indicate the essential or fundamental part of something. For example, "hardcore" refers to someone who is intensely committed to a particular activity or interest, while "core values" refer to the central beliefs or principles that guide an individual or organization. Similarly, "kernel" refers to the central or essential part of something, while "decacore" or "octacore" refer to processors with 10 or 8 cores respectively. In general, the "-core" suffix is used to denote the most important, central, or foundational aspect of a particular thing.

10 examples of -core as a suffix…

Hardcore

Scorecore

Softcore

Biocore

Decacore

Core values

Multicore

Megacore

Nucleus accumbens core

Core competency

More words like cottagecore…

Goblincore

Dark academia

Light academia

Farmcore

Witchcore

Fairycore

Plant mom aesthetic

Grandmacore

Art academia

... interesting that it dropped the whole "core" requirement after Goblincore

... even more interesting: terms like "dark academia" are ALSO aesthetic terms,

... much like Cottagecore or Goblincore. The LLM picked up on that sense of my query

Five more words…

Goblincore

Forestcore

Dark cottagecore

Ocean cottagecore

Vintage cottagecore

... who knew there was so much cottagecore in the world?

Bard:

What does the -core suffix mean?

The -core suffix is a portmanteau of the words "hard" and "core". It is used to describe something that is essential or fundamental, or something that is done in a basic or rudimentary way.

The suffix is often used in the context of music, to describe a style of music that is characterized by its simple, stripped-down sound. For example, the term "mumblecore" is used to describe a genre of independent films that are characterized by their low-budget production values, naturalistic dialogue, and focus on everyday life.

The -core suffix can also be used to describe a type of person or group. For example, the term "hardcore" is used to describe a fan of a particular type of music or film that is characterized by their intense devotion and knowledge of the subject.

10 examples of -core as a suffix…

Aircore

Biocore

Carboncore

Core-edge

Core-flow

Core-free

Core-hole

Core-sample

Core-shot

Core-wall

... Bard really got stuck on Core- as a prefix, not a suffix

More examples of words like cottagecore

Fairycore

Goblincore

Grandmacore

Farmcore

Five more words

Log cabin core

Rustic core

Cozycore

Naturecore

Wildcore

... I had no idea about "Log cabin core" or "Naturecore"

... and happy that it didn't repeat any of the earlier terms

This little experiment worked pretty well!

I know that LLMs sometimes hallucinate, so naturally I double-checked all of these cottagecore-style terms. Sure enough, they all name some particular design aesthetic, even the few (like "dark academia") that don't end in -core.

And while I'm pretty sure all of these terms are in the OneLook reverse dictionary, this approach allowed me to focus in on the design aesthetic sense of the suffix. That's a big timesaver.
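Part of that checking is purely mechanical: does each term really end in -core, and has the LLM already given it to me? Here's a minimal sketch of that step, using the two lists ChatGPT gave me for "words like cottagecore." The function name `check_terms` is made up for illustration, and this only checks spelling and repeats. It can't tell you whether a term is real, so it's no substitute for looking each one up.

```python
def check_terms(terms, suffix="core", seen=()):
    """Split terms into those ending in the suffix, those that don't,
    and repeats of terms that were already seen (case-insensitive)."""
    seen_lower = {t.lower() for t in seen}
    matches, misses, repeats = [], [], []
    for term in terms:
        key = term.lower()
        if key in seen_lower:
            repeats.append(term)
            continue
        seen_lower.add(key)
        (matches if key.endswith(suffix) else misses).append(term)
    return matches, misses, repeats


# ChatGPT's answers from the summary above.
first_batch = ["Goblincore", "Dark academia", "Light academia", "Farmcore",
               "Witchcore", "Fairycore", "Plant mom aesthetic",
               "Grandmacore", "Art academia"]
second_batch = ["Goblincore", "Forestcore", "Dark cottagecore",
                "Ocean cottagecore", "Vintage cottagecore"]

matches, misses, _ = check_terms(first_batch)
print(misses)
# → ['Dark academia', 'Light academia', 'Plant mom aesthetic', 'Art academia']

_, _, repeats = check_terms(second_batch, seen=first_batch)
print(repeats)
# → ['Goblincore']
```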

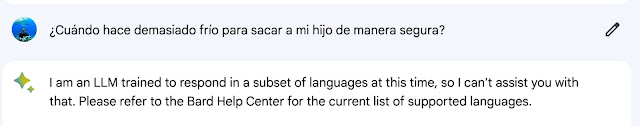

Bottom line: Yes, LLMs can be a very handy SearchResearch tool. But as mentioned, check everything. (I was surprised when I asked an LLM for a [ short bio of Daniel M. Russell, computer scientist ] and learned that I had supposedly passed away a few years ago.)

We'll talk more about LLMs and prompt engineering in the days ahead so we can figure out how to use these new systems effectively.

Cottagecore.

Keep searching!

=================

Here are my interactions with ChatGPT and Bard in full.

Searching for -core words and their meanings with OpenAI's ChatGPT

Dan: What does the -core suffix mean?

ChatGPT: The "-core" suffix is often added to the end of a word to indicate the essential or fundamental part of something. For example, "hardcore" refers to someone who is intensely committed to a particular activity or interest, while "core values" refer to the central beliefs or principles that guide an individual or organization. Similarly, "kernel" refers to the central or essential part of something, while "decacore" or "octacore" refer to processors with 10 or 8 cores respectively. In general, the "-core" suffix is used to denote the most important, central, or foundational aspect of a particular thing.

Dan: can you give me 10 examples of words that use core as a suffix?

ChatGPT: Sure, here are 10 examples of words that use "-core" as a suffix:

Hardcore: intense or extreme; as in "hardcore punk rock."

Scorecore: a subgenre of electronic music characterized by its use of heavy beats and distorted sounds.

Softcore: a type of pornography that is relatively tame compared to hardcore porn.

Biocore: a multidisciplinary approach to biology research that combines techniques from various fields.

Decacore: a processor with ten cores.

Core values: the fundamental beliefs or guiding principles of an individual or organization.

Multicore: a processor with multiple cores.

Megacore: a processor with millions of cores.

Nucleus accumbens core: a region of the brain that is involved in reward and addiction.

Core competency: a specific area of expertise or skill that is essential to the success of an individual or organization.

Dan: can you give me more examples of words like cottagecore?

ChatGPT: Sure! Here are some examples of words like "cottagecore" that follow a similar pattern:

Goblincore: a subculture that celebrates the aesthetics of goblins, featuring earthy tones, natural materials, and a love of the outdoors.

Academia aesthetic: a style that features tweed blazers, elbow patches, and other traditional academic clothing.

Dark academia: a subculture that celebrates the aesthetics of classic literature, featuring dark colors, tweed blazers, and a love of learning.

Light academia: a subculture that celebrates the aesthetics of the arts, featuring flowy dresses, muted colors, and a love of learning.

Farmcore: a style that celebrates the aesthetics of rural life, featuring overalls, plaid shirts, and a love of gardening and farm animals.

Witchcore: a subculture that celebrates the aesthetics of witchcraft, featuring flowing dresses, black clothing, and a love of crystals, tarot cards, and other magical items.

Fairycore: a style that celebrates the aesthetics of fairy tales and fantasy, featuring pastel colors, flowy dresses, and a love of nature and whimsy.

Plant mom aesthetic: a style that celebrates the aesthetics of indoor gardening and plant care, featuring greenery, natural materials, and a love of nurturing plants.

Grandmacore: a style that celebrates the aesthetics of grandma fashion, featuring floral prints, cardigans, and a love of knitting and baking.

Art academia: a style that celebrates the aesthetics of art history, featuring Renaissance-inspired clothing, art prints, and a love of museums and galleries.

Dan: can you give me 5 more such words?

ChatGPT: Sure! Here are 5 more examples of words that follow a similar pattern to "cottagecore":

Goblincore: a style that celebrates the aesthetics of goblins and other earthy creatures, featuring natural materials, earthy tones, and a love of the outdoors.

Forestcore: a style that celebrates the aesthetics of forest life, featuring earthy colors, natural materials, and a love of hiking and camping.

Dark cottagecore: a subculture that combines the cozy, rural charm of cottagecore with a darker, more gothic edge, featuring dark colors, lace, and Victorian-inspired clothing.

Ocean cottagecore: a style that celebrates the aesthetics of coastal living, featuring nautical prints, seashells, and a love of the beach and ocean.

Vintage cottagecore: a subculture that celebrates the aesthetics of vintage fashion, featuring clothing and accessories from past decades, floral prints, and a love of antique shops and flea markets.

================

Searching for -core words and their meanings with Google's Bard

Dan: What does the -core suffix mean?

Bard: The -core suffix is a portmanteau of the words "hard" and "core". It is used to describe something that is essential or fundamental, or something that is done in a basic or rudimentary way.

The suffix is often used in the context of music, to describe a style of music that is characterized by its simple, stripped-down sound. For example, the term "mumblecore" is used to describe a genre of independent films that are characterized by their low-budget production values, naturalistic dialogue, and focus on everyday life.

The -core suffix can also be used to describe a type of person or group. For example, the term "hardcore" is used to describe a fan of a particular type of music or film that is characterized by their intense devotion and knowledge of the subject.

Dan: can you give me 10 examples of words that use core as a suffix?

Bard: Sure, here are 10 examples of words that use core as a suffix:

Aircore - a drilling rig that uses compressed air to lift the cuttings out of the hole.

Biocore - a company that develops and manufactures bio-based products.

Carboncore - a type of steel that is made with a high percentage of carbon.

Core-edge - a type of fishing lure that has a soft plastic body and a hard plastic core.

Core-flow - a type of concrete that is made with a high percentage of air.

Core-free - a type of motor that does not have a core.

Core-hole - a hole that is drilled down into the earth's crust.

Core-sample - a sample of rock or soil that is taken from a core-hole.

Core-shot - a piece of rock or soil that is broken off from a core-sample.

Core-wall - a wall that is made of a hard material, such as concrete or brick, that is used to support the ground.

Dan: can you give me more examples of words like cottagecore?

Bard: Sure, here are some more examples of words like cottagecore:

Fairycore - an aesthetic that is inspired by fairies and the natural world. It is often characterized by light colors, floral prints, and lace.

Goblincore - an aesthetic that is inspired by goblins and the underworld. It is often characterized by dark colors, mushrooms, and bones.

Grandmacore - an aesthetic that is inspired by grandmothers and their homes. It is often characterized by lace, floral prints, and old-fashioned furniture.

Farmcore - an aesthetic that is inspired by farming and the countryside. It is often characterized by denim, overalls, and boots.

These are just a few examples of words that are similar to cottagecore. There are many other words that could be added to this list.

Dan: can you give me 5 more such words?

Bard: Sure, here are 5 more similar words:

Log cabin core - an aesthetic that is inspired by log cabins and the outdoors. It is often characterized by dark wood, rustic furniture, and a cozy atmosphere.

Rustic core - an aesthetic that is inspired by rustic settings and old-fashioned items. It is often characterized by wood, stone, and burlap.

Cozycore - an aesthetic that is inspired by comfort and relaxation. It is often characterized by soft colors, fluffy blankets, and pillows.

Naturecore - an aesthetic that is inspired by nature and the outdoors. It is often characterized by plants, animals, and natural scenery.

Wildcore - an aesthetic that is inspired by the wild and untamed. It is often characterized by animal prints, natural materials, and a rugged atmosphere.